A Support Vector Machine in just a few Lines of Python Code

A Support Vector Machine in just a few Lines of Python Code

Content created by webstudio Richter alias Mavicc on March 30. 2017.

In the last tutorial we coded a perceptron using Stochastic Gradient Descent. The perceptron solved a linear seperable classification problem, by finding a hyperplane seperating the two classes.

The point here is, that the perceptron just finds one possible hyperplane, not necessarily the optimal hyperplane. That can lead to lower generalization performance.

Here Support Vector Machines (SVM) come into place. In contrast to perceptrons, SVMs try to find a hyperplane, which maximizes the margin between two classes.

As last time, we will focus on developing the code, not so much the theory of SVMs.

I highly advise you the book of Schölkopf & Smola. Do not let the math scare you, as they explain the basics of machine learning in a really comprehensive way:

Schölkopf & Smola (2002). Learning with Kernels. Support Vector Machines, Regularization, Optimization, and Beyond.

Furthermore there is a short introduction to the mathematical backgrounds of a SVM in the following presentation:

An Idiot’s guide to Support vector machines (SVMs)

An overview of some more tutorials you can find here:

Give me the Code!

import numpy as np

X = np.array([

[-2,4,-1],

[4,1,-1],

[1, 6, -1],

[2, 4, -1],

[6, 2, -1],

])

y = np.array([-1,-1,1,1,1])

def svm_sgd(X, Y):

w = np.zeros(len(X[0]))

eta = 1

epochs = 100000

for epoch in range(1,epochs):

for i, x in enumerate(X):

if (Y[i]*np.dot(X[i], w)) < 1:

w = w + eta * ( (X[i] * Y[i]) + (-2 *(1/epoch)* w) )

else:

w = w + eta * (-2 *(1/epoch)* w)

return w

w = svm_sgd(X,y)

print(w)

[ 1.58876117 3.17458055 11.11863105]

Our Ingredients

First we will import numpy to easily manage linear algebra and calculus operations in python. To plot the learning progress later on, we will use matplotlib. The third line just allows matplotlib to plot the graphs directly in the jupyter notebook.

import numpy as np

from matplotlib import pyplot as plt

%matplotlib inline

Stochastic Gradient Descent

As for the perceptron, we use python 3 and numpy. The SVM will learn using the stochastic gradient descent algorithm (SGD). SGD minimizes a function by following the gradients of the cost function. For further details see:

Wikipedia - Stochastic Gradient Descent

Calculating the Error

To calculate the error of a prediction we first need to define the objective function of the SVM.

Hinge Loss Function

To do so, we need to define the loss function, to calculate the prediction error. We will use hinge loss for our SVM:

$c$ is the loss function, $x$ the sample, $y$ is the true label, $f(x)$ the predicted label.

This means the following:

So consider, if y and f(x) are signed values $(+1,-1)$:

- the loss is 0, if $y*f(x)$ are positive, respective both values have the same sign.

- loss is $1-y*f(x)$ if $y*f(x)$ is negative

Objective Function

As we defined the loss function, we can now define the objective function for the SVM:

As you can see, our objective of a SVM consists of two terms. The first term is a regularizer, the heart of the SVM, the second term the loss. The regularizer balances between margin maximization and loss. To get more information I advice you the tutorial introduction of the above adviced Schölkopf & Smola book.

Derive the Objective Function

To minimize this function, we need the gradients.

As we have two terms, we will derive them seperately using the sum rule in differentiation.

This means, if we have a misclassified sample $x_i$, respectively $y_i \langle x_i,w \rangle \ < \ 1$, we update the weight vector w using the gradients of both terms, if $y_i \langle x_i,w \rangle \geq 1$ we just update w by the gradient of the regularizer. To sum it up, our SGD for the SVM looks like this:

if $y_i⟨x_i,w⟩ < 1$: else:

Note here the difference to the perceptron. The weight vector is updated by the regularizer gradient, even if the samples is classified correctly.

Our Data Set

First we need to define a labeled data set. If you read the perceptron tutorial you will already know it.

X = np.array([

[-2, 4],

[4, 1],

[1, 6],

[2, 4],

[6, 2]

])

y = np.array([-1,-1,1,1,1])

For simplicity’s sake we again fold the bias term into the data set:

X = np.array([

[-2,4,-1],

[4,1,-1],

[1, 6, -1],

[2, 4, -1],

[6, 2, -1],

])

y = np.array([-1,-1,1,1,1])

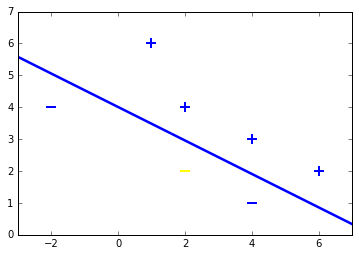

This small toy data set contains two samples labeled with $-1$ and three samples labeled with $+1$. This means we have a binary classification problem, as the data set contains two sample classes. Lets plot the dataset to see, that is is linearly seperable:

for d, sample in enumerate(X):

# Plot the negative samples

if d < 2:

plt.scatter(sample[0], sample[1], s=120, marker='_', linewidths=2)

# Plot the positive samples

else:

plt.scatter(sample[0], sample[1], s=120, marker='+', linewidths=2)

# Print a possible hyperplane, that is seperating the two classes.

plt.plot([-2,6],[6,0.5])

[<matplotlib.lines.Line2D at 0x7f8697c13e80>]

Lets Start implementing Stochastic Gradient Descent

Finally we can code our SGD algorithm using our update rules.

To keep it simple, we will linearly loop over the sample set. For larger data sets it makes sence, to randomly pick a sample during each iteration in the for-loop.

def svm_sgd(X, Y):

w = np.zeros(len(X[0]))

eta = 1

epochs = 100000

for epoch in range(1,n):

for i, x in enumerate(X):

if (Y[i]*np.dot(X[i], w)) < 1:

w = w + eta * ( (X[i] * Y[i]) + (-2 *(1/epoch)* w) )

else:

w = w + eta * (-2 *(1/epoch)* w)

return w

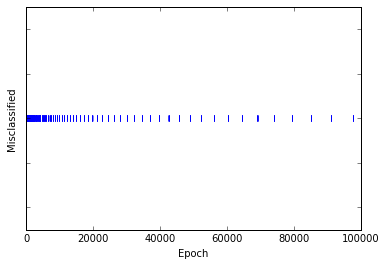

We will run the sgd $100000$ times. Our learning parameter eta is set to $1$. As a regulizing parameter we choose $1/epoch$, so this parameter will decrease, as the number of epochs increases.

Code Description Line by Line

line 2: Initialize the weight vector for the perceptron with zeros

line 3: Set the learning rate to 1

line 4: Set the number of epochs

line 6: Iterate n times over the whole data set. The Iterator is begins with $1$ to avoid division by zero during regularization parameter calculation

line 7: Iterate over each sample in the data set.

line 8: Misclassification condition $y_i \langle x_i,w \rangle < 1$

line 9: Update rule for the weights $w = w + \eta (y_ix_i - 2\lambda w)$ including the learning rate $\eta$ and the regularizer $\lambda$

line 11: If classified correctly just update the weight vector by the derived regularizer term $w = w + \eta (-2\lambda w)$.

Let the SVM learn!

Next we can execute our code, to calculate the proper weight vector, which fits our training data.

def svm_sgd_plot(X, Y):

w = np.zeros(len(X[0]))

eta = 1

epochs = 100000

errors = []

for epoch in range(1,epochs):

error = 0

for i, x in enumerate(X):

if (Y[i]*np.dot(X[i], w)) < 1:

w = w + eta * ( (X[i] * Y[i]) + (-2 *(1/epoch)* w) )

error = 1

else:

w = w + eta * (-2 *(1/epoch)* w)

errors.append(error)

plt.plot(errors, '|')

plt.ylim(0.5,1.5)

plt.axes().set_yticklabels([])

plt.xlabel('Epoch')

plt.ylabel('Misclassified')

plt.show()

svm_sgd_plot(X,y)

The above graph shows that the SVM makes less misclassifications the more epochs it is running. In contrast to our perceptron we do not reach zero errors permanently, as the SVM updates its weight vector by the regularizer, even if the current samples is correctly classified. What looks like a bad deal, is the strengh of the SVM, as it always tries to maximize the margin between the two classes.

To sum this up, the perceptron is satisfied, when it finds a seperating hyperplane, our SVM in contrast always tries to optimize the hyperplane, by maximizing the distance between the two classes.

The weight vector of the SVM including the bias term after 100000 epochs is $(1.56, 3.17, 11.12)$.

We can extract the following prediction function now:

The weight vector is $(1.56,3.17)$ and the bias term is the third entry 11.12.

Evaluation

Lets classify the samples in our data set by hand now, to check if the SVM learned properly:

First sample $(-2, 4)$, supposed to be negative:

Second sample $(4, 1)$, supposed to be negative:

Third sample $(1, 6)$, supposed to be positive:

Fourth sample $(2, 4)$, supposed to be positive:

Fifth sample $(6, 2)$, supposed to be positive:

Lets define two test samples now, to check how well our perceptron generalizes to unseen data:

First test sample $(2, 2)$, supposed to be negative:

Second test sample $(4, 3)$, supposed to be positive:

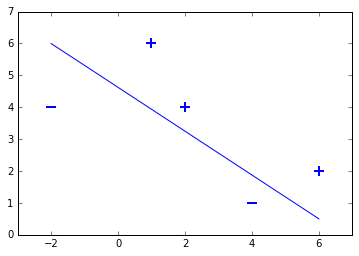

Both samples are classified right. To check this geometrically, lets plot the samples including test samples and the hyperplane.

for d, sample in enumerate(X):

# Plot the negative samples

if d < 2:

plt.scatter(sample[0], sample[1], s=120, marker='_', linewidths=2)

# Plot the positive samples

else:

plt.scatter(sample[0], sample[1], s=120, marker='+', linewidths=2)

# Add our test samples

plt.scatter(2,2, s=120, marker='_', linewidths=2, color='yellow')

plt.scatter(4,3, s=120, marker='+', linewidths=2, color='blue')

# Print the hyperplane calculated by svm_sgd()

x2=[w[0],w[1],-w[1],w[0]]

x3=[w[0],w[1],w[1],-w[0]]

x2x3 =np.array([x2,x3])

X,Y,U,V = zip(*x2x3)

ax = plt.gca()

ax.quiver(X,Y,U,V,scale=1, color='blue')

<matplotlib.quiver.Quiver at 0x7f8695950710>